Neo4j and the Ultimate Cypher Cheat Sheet for Data Scientist

- Sophie Yin

- Jan 8, 2022

- 5 min read

Updated: Jan 12, 2022

Here is the ultimate guide I have compiled for Cypher Queries that data scientists will be most interested in. This is mostly just in Cypher and does not use the Data Science Apps, please subscribe so you don't miss out on when I come out with the use of Data Science Apps.

Administrative

Login in stuff is used on Neo4j Desktop and also if you want to connect it to Python, Scala, or Java. I am also writing an article on how to use Neo4j in Scala, so please subscribe if you are interested!

:server disconnect

:help

:help cypher

//trick if you don't remember your login for external connection, //just create a new user

SHOW USERS

CREATE USER nameeee SET PASSWORD passworddd

DROP USER oldname

Creation

You can create different types of nodes or relationships together, with node label.

//Create new nodes and relationships, together or individually

CREATE (jkrowling:Person {name: "JK Rowling", born: 1965}),

(tomf:Person {name: "Tom Felton", born: 1987}),

(ruperg:Person {name: "Rupert Grint", born: 1988}),

(danielr:Person {name: "Daniel Radcliff"}),

(emmaw:Person {name: "Emma Watson", born: 1990}),

(alanr:Person {name: "Alan Rickman", born: 1947}),

(garyo:Person {name: "Gary Oldman", born: 1958}),

(hp1:Movie {title: "Philosopher's Stone", publication: 2001}),

(hp2:Movie {title: "Chamber of Secrets", publication: 2002}),

(hp3:Movie {title: "Prisoner of Azkaban", publication: 2003}),

(hp1)<-[reldan1: ACTED {role: "Harry Potter"}]-(danielr),

(emmaw)-[relem1: ACTED {role: "Hermione Granger"}]->(hp1),

(tomf)-[reltom1: ACTED {role: "Draco Malfoy"}]->(hp1),

(rupertg)-[relrup1: ACTED {role: "Ron Weasley"}]->(hp1),

(hp2)<-[reldan2: ACTED {role: "Harry Potter"}]-(danielr),

(emmaw)-[relem2: ACTED {role: "Hermione Granger"}]->(hp2),

(tomf)-[reltom2: ACTED {role: "Draco Malfoy"}]->(hp2),

(rupertg)-[relrup2: ACTED {role: "Ron Weasley"}]->(hp2),

(hp3)<-[reldan3: ACTED {role: "Harry Potter"}]-(danielr),

(emmaw)-[relem3: ACTED {role: "Hermione Granger"}]->(hp3),

(tomf)-[reltom3: ACTED {role: "Draco Malfoy"}]->(hp3),

(rupertg)-[relrup3: ACTED {role: "Ron Weasley"}]->(hp3),

(alanr)-[relal1: ACTED {role: "Severus Snape"}]->(hp1),

(alanr)-[relal2: ACTED {role: "Severus Snape"}]->(hp2),

(alanr)-[relal3: ACTED {role: "Severus Snape"}]->(hp3),

(garyo)-[relgar: ACTED {role: "Sirius Black"}]->(hp3),

(jkrowling)-[reljk1: WROTE]->(hp1),

(jkrowling)-[reljk2: WROTE]->(hp2),

(jkrowling)-[reljk3: WROTE]->(hp3)

//Create relationship between existing nodes

MATCH (p), (m)

WHERE p.name = "Gary Oldman" AND m.title = "Prisoner of Azkaban"

CREATE (p)-[:ACTED]->(m)Update

Update on existing nodes and relationships

//Update node properties

MATCH (node:Person {name: "Daniel Radcliff"})

SET node.name = "Daniel Radcliffe"

//Check and update

MATCH (node:Person)

WHERE node.name = "Daniel Radcliffe" AND node.born IS NULL

SET node.born 1989

//Update multiple node properties

MATCH (node:Person)

SET node+={name: "Daniel Radcliffe", born: 1987}

//MATCH (node:Person)

SET node = {name: "Daniel Radcliffe", born: 1989}

//Rename property

MATCH (node:Person)

WHERE node.birth IS NULL

SET node.birth = node.born

REMOVE node.bornMatch

Show and display nodes and relationships

//Single node label

MATCH (node:Person) RETURN node LIMIT 25

//Multiple node label

MATCH (node1:Person)

MATCH (node2:Movie) RETURN node1, node2 LIMIT 50

//Conditional with node properties

MATCH (node1:Person)

MATCH (node2:Movie)

WHERE node1.born > 1985

RETURN node1, node2

//Conditional with relationship label

MATCH rel =()-[r:ACTED]->()

RETURN rel LIMIT 25

//Conditional with relationship label and node properties

MATCH rel =(node1:Person)-[r:ACTED]->(node2:Movie)

WHERE node1.born > 1985

RETURN rel LIMIT 25

//Conditional with relationship label, node properties, and relationship property

MATCH rel =(node1:Person)-[r:ACTED]->(node2:Movie)

WHERE node1.born > 1985 AND r.role = "Draco Malfoy"

RETURN rel LIMIT 25

//Conditional query with newly created var to filter

MATCH (node1)-[r]->(node2)

WITH *, type(r) AS connectionType

RETURN node1.name, node2.name, connectionType

//Conditional with unwind to get subset

MATCH (node1: Person)-[:ACTED]-(node2: Movie)

WITH collect(node1) as p

UNWIND p as ll

return ll

//Unioned result with duplicates

MATCH (node:Person)

WHERE node.name = "Emma Watson"

RETURN node

UNION ALL

MATCH (node:Person)

WHERE node.name = "Rupert Grint"

RETURN node

//Unioned result without duplicates

MATCH (node:Person)

WHERE node.name = "Emma Watson"

RETURN node

UNION

MATCH (node:Person)

WHERE node.name = "Rupert Grint"

RETURN node

//alternatively you can use merge which is the combination of match //and create

MATCH (node1:Person { name: 'Daniel Radcliffe' }), (node2:Movie { name: 'Chamber of Secrets' })

MERGE (node1)-[rel: ACTED]->(node2)

RETURN rel //this first checks if the relationship exists, if it doesn't then it creates itDeletion

Deleting nodes, relationships, and properties

//Single node, must delete relationship first before deleting a //node

MATCH (node1)-[rel: ACTED]-()

WHERE node1.name = "Alan Rickman"

DELETE rel, node1

//Single relationship

MATCH (node1 {name: 'Alan Rickman'})-[rel: ACTED]->(hp1)

DELETE r

//Single node and all the connected relationships

MATCH (n {name: 'Alan Rickman'})

DETACH DELETE n

//All nodes and relationships

MATCH (n)

DETACH DELETE n

//Drop duplicates for a node to have

MATCH (node:Person)

WITH node.name AS name, COLLECT() AS branches

WHERE SIZE(branches) > 3

FOREACH ( IN branches | DETACH DELETE n )

//Check for duplicates on nodes

MATCH (p:Person)

WITH p.id as id, collect(p) AS nodes

WHERE size(nodes) > 1

RETURN [ n in nodes | n.id] AS ids, size(nodes)

ORDER BY size(nodes) DESC

LIMIT 10Constraints

Use constraints to check on your data to check if data quality and basic rules are satisfied, it will help when importing data into it, as it will check the constraints for you and enforce it

// Existence constraint on mandatory columns

CREATE CONSTRAINT ON (node: Person)

ASSET EXISTS (node.name)

// Uniqueness constraint on id

CREATE CONSTRAINT ON (node: Person)

ASSERT node.name IS UNIQUE

//Show all constraints

SHOW CONSTRAINT INFO

//Drop existence constraints

DROP CONSTRAINT ON (node: Person)

ASSERT EXISTS (node.name)

//Drop uniqueness constraint

DROP CONSTRAINT ON (node: Person)

ASSERT node.name IS UNIQUECommon Functions

Common functions of aggregation we use for SQL

//count of all nodes

MATCH (node)

RETURN COUNT(node)

//count of all relationships

MATCH ()-->()

RETURN COUNT(*)

//average of property

MATCH (node:Person)

RETURN AVG(node.born)

//you can use id, ceil, datetime, etc use CALL dbms.functions() to call on all functions available to you

//returns all unique labels

CALL db.labels()

//returns all unique relationships

CALL db.relationshipTypes()

//check how the nodes are related

CALL db.schema.visualization()General GDS call guide

The basic call for a GDS is split into a few sections:

CALL gds[.<tier>].<algorithm>.<execution-mode>[.<estimate>](

graphName: String,

configuration: Map

)

The tier is basically the functions' grade, whether they have passed the tests, but you are free to use any tier just be care of changes they make.

Tier | Description |

product-quality | Indicates that the algorithm has been tested with regards to stability and scalability. Algorithms in this tier are prefixed with gds.<algorithm> and are supported by Neo4j. |

beta | Indicates that the algorithm is a candidate for the production-quality tier. Algorithms in this tier are prefixed with gds.beta.<algorithm>. |

alpha | Indicates that the algorithm is experimental and might be changed or removed at any time. Algorithms in this tier are prefixed with gds.alpha.<algorithm>. |

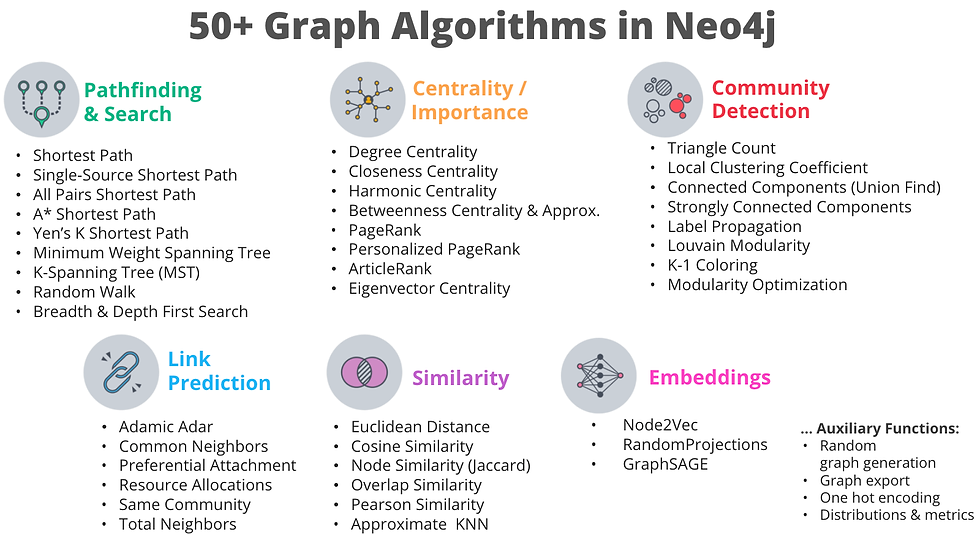

The algorithm is what the data scientists and data engineers are all waiting for. Here is the list Neo4j has put out for more information on each algorithm please go to Syntax overview - Neo4j Graph Data Science

The execution mode is basically trying to target the specific uses of your purpose for running the code. Usually writing out takes a long time, so by choosing wisely on the perhaps I assume you would be able to save some time.

Execution Mode | Description |

Stream | Returns the result of the algorithm as a stream of records. |

Stats | Returns a single record of summary statistics, but does not write to the Neo4j database. |

Mutate | Writes the results of the algorithm to the in-memory graph and returns a single record of summary statistics. This mode is designed for the named graph variant, as its effects will be invisible on an anonymous graph. |

Write | Writes the results of the algorithm to the Neo4j database and returns a single record of summary statistics. |

Graph Relevant Functions

Must have already downloaded the plugins

//create the graph you are going to analyze on, you can use subsets //of the graph you are interested

CALL gds.graph.create.cypher(

'HarryPotterSeries', //graphName

'MATCH (node) RETURN id(node) as id', //nodeQuery, neo4j works on node id not

'MATCH (node)-[rel]->(node2) RETURN id(node) AS source, id(node2) AS target, rel.weight AS weight' //relationshipQuery

)

// need to label source, target, and weight

//delete a graph

CALL gds.graph.drop('HarryPotterSeries') YIELD graphName

//list of graphs with it's descriptive information

CALL gds.graph.list()

//pagerank function

CALL gds.pageRank.stream('HarryPotterSeries', {

maxIterations: 20,

dampingFactor: 0.85,

relationshipWeightProperty: 'weight'

})

YIELD nodeId, score

RETURN gds.util.asNode(nodeId).id AS id, gds.util.asNode(nodeId).name as name, score as full_pagerank

ORDER BY full_pagerank DESC

//betweenness

CALL gds.betweenness.stream(

"HarryPotterSeries"

)

YIELD nodeId, score //default yield, don't have to specify

//degree centrality

CALL gds.degree.stream(

"HarryPotterSeries"

)

//node similarity

CALL gds.nodeSimilarity.stream("HarryPotterSeries")

//louvain

CALL gds.louvain.stream('HarryPotterSeries')Bulk Load Nodes and Relationships

This will be useful to add bunch of relationships and nodes at once to build a proper graph.

LOAD CSV WITH HEADERS FROM 'https://docs.google.com/spreadsheets/d/e/2PACX-1vQxtB5bngX8UoQgqY5Ubby4DWli8ryLW0UnDv0-PPzPnvO3ChoZ3ZuHYE1Hgc8mc98TlgQ2kZIO-4_N/pub?gid=0&single=true&output=csv' AS row1

MERGE (c:Companies {companyId: row1.Id, location: row1.Location})

LOAD CSV WITH HEADERS FROM 'https://docs.google.com/spreadsheets/d/e/2PACX-1vQxtB5bngX8UoQgqY5Ubby4DWli8ryLW0UnDv0-PPzPnvO3ChoZ3ZuHYE1Hgc8mc98TlgQ2kZIO-4_N/pub?gid=736102473&single=true&output=csv' AS row2

MERGE (e:Employees {employeeId: row2.Id, email: row2.Email})

LOAD CSV WITH HEADERS FROM 'https://docs.google.com/spreadsheets/d/e/2PACX-1vQxtB5bngX8UoQgqY5Ubby4DWli8ryLW0UnDv0-PPzPnvO3ChoZ3ZuHYE1Hgc8mc98TlgQ2kZIO-4_N/pub?gid=1931026342&single=true&output=csv' AS row3

MATCH (e:Employees {employeeId: row3.employeeId})

MATCH (c:Companies {companyId: row3.Company})

MERGE (e)-[:WORKS_FOR]->(c)

RETURN *

Comments